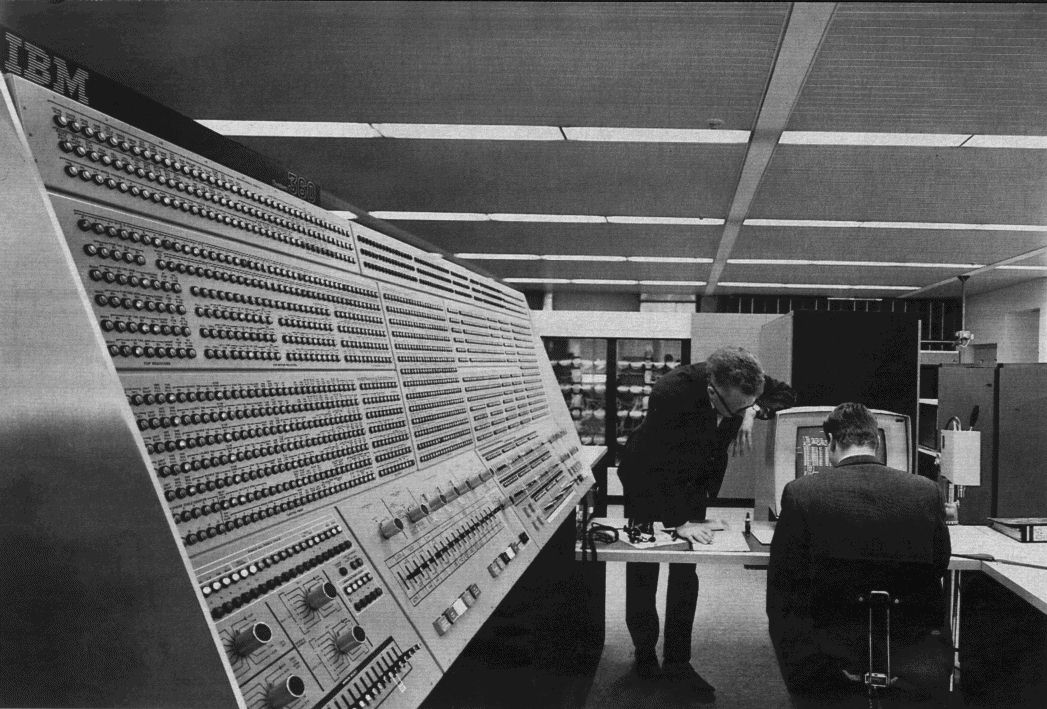

The great digital continent had been formed. The ARPANET had grown into the internet, a sprawling, interconnected nervous system linking machines and minds across the globe. The roads were built and the traffic was flowing, but the question we were left with still hung in the air: what would this new global brain have to say? For decades, the two great tribes of AI had fought over the nature of the machine's soul. Was it a logician, operating on the clean, crisp rules of symbolic reason? Or was it a learner, a neural network that slowly built an intuition about the world from the bottom up?

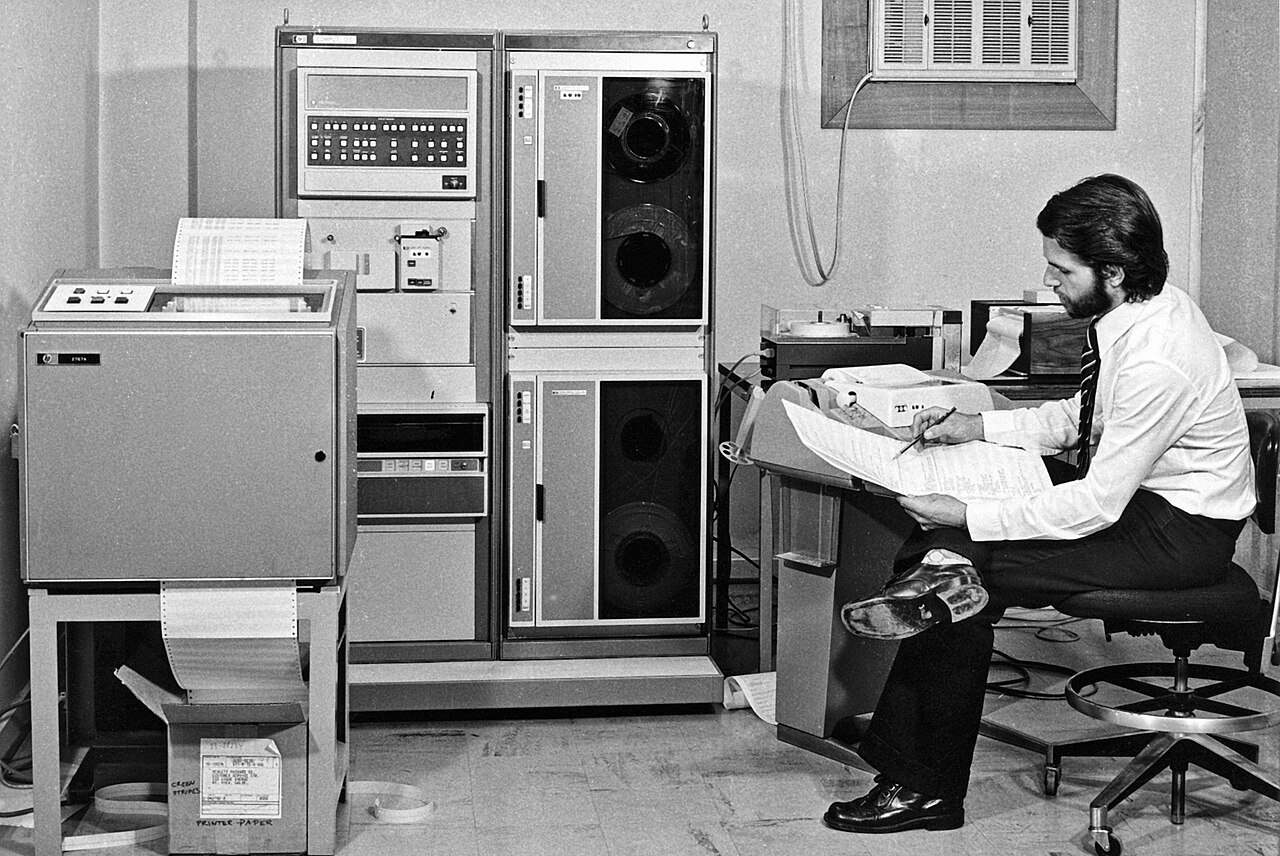

For a long time, the learners, heirs to the neural-network research championed by Geoffrey Hinton and others, had been making slow but steady progress. They were getting remarkably good at recognizing things. They could study a million pictures and learn to identify a cat, or listen to thousands of hours of audio and learn to transcribe speech. This was a powerful form of intelligence, but a passive, receptive one. It could label the world, but it couldn't add to it. The ghost in the machine could see and hear, but it was still mute.

The transformation, when it came, was not the result of a single discovery but the violent convergence of three mighty rivers. The first was data. The internet had become a colossal, planetary-scale library of human expression: trillions of words, billions of images, an archive of a vast share of what our species had written, said, or created. For a learning machine, this was the ultimate textbook.

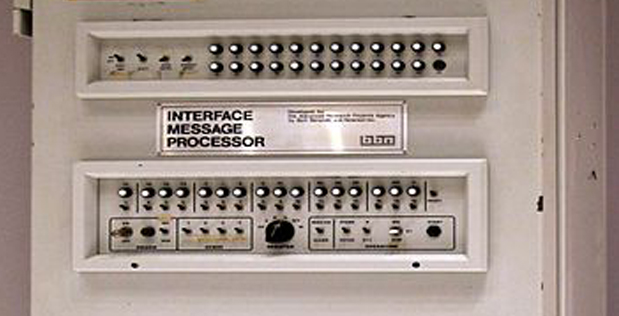

The second was power. The relentless march of Moore's Law, supercharged by graphics processing units (GPUs), finally gave Hinton's ideas the raw horsepower they had always needed. GPUs had been built to render video-game graphics, but their ability to perform thousands of simple calculations in parallel turned out to be exactly what neural networks required.¹

The third, and most important, was a new idea. In 2017, a team of researchers at Google published a paper with a deceptively simple title: "Attention Is All You Need."² It introduced a new neural network architecture called the "Transformer." The technical details were complex, but the core concept, called attention, was revolutionary. As the model processed each word in a passage, it could weigh how relevant every other word was to that word's meaning. Earlier networks had to read text one word at a time, carrying a fading memory of what came before; the Transformer considered the whole passage at once. That let it capture context, nuance, and long-range dependencies far better than its predecessors, and it made training on enormous amounts of text practical.
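For readers who want to see the mechanism, here is a minimal sketch of the scaled dot-product attention the 2017 paper describes, written in plain Python. It rests on simplifying assumptions: each word is a short, hand-made vector; the same vectors serve as queries, keys, and values (a real Transformer learns separate projections for each, runs many attention "heads" in parallel, and stacks many layers); and the function names are illustrative, not taken from any library.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors.

    For each query: score it against every key, scale by sqrt(d_k),
    normalize with softmax, and return the weighted average of the values.
    Simplification (assumption): no learned projections, a single head.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional "embeddings" for three words (made up for illustration).
words = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
outputs, weights = attention(vectors, vectors, vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how strongly one word "attends" to each of the three words, and every row sums to 1. Dividing by the square root of the vector length follows the paper; it keeps the scores from growing so large that the softmax saturates.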

This combination of planetary data, massive computing power, and the Transformer architecture created a seismic shift. Neural networks grew so vast, and were trained on so much of the human library, that they crossed a threshold. They became so good at predicting the next word in a sentence that they could do more than just predict. They could generate. Generation, it turned out, was simply prediction repeated: predict a word, append it to the text, and predict the next. Give them a prompt, and they could continue it, writing paragraphs, poems, and computer code with astonishing coherence.
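The leap from prediction to generation is easier to see in code. The sketch below is deliberately crude: instead of a Transformer, it uses a hypothetical bigram counter that predicts the next word only from the single word before it. The loop, however, has the same shape as the one a language model runs: predict a word, append it, and predict again. The function names are illustrative, and real models usually sample from their predicted probabilities rather than always taking the top choice, as this sketch does.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_new_words=5):
    """Extend the prompt one word at a time with the most frequent next word.

    Greedy decoding (assumption): always pick the top candidate.
    """
    words = prompt.split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model never saw this word followed by anything
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)  # the prediction becomes part of the input
    return " ".join(words)


follows = train_bigrams("the cat sat on the mat the cat sat")
print(generate(follows, "the", max_new_words=2))  # prints "the cat sat"
```

A bigram counter sees only one word of context, which is exactly the limitation attention removed: a Transformer conditions each prediction on the whole passage so far.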

They were no longer just recognizing the world; they were creating new parts of it. In 1843, Ada Lovelace, who once asked for "poetical science" as her blend of imagination and mathematics, had speculated that a calculating engine might one day "compose elaborate and scientific pieces of music." Now something like her dream was finally being made real.³ These Large Language Models, or LLMs, were the first true evidence that the ghost in the machine could be a collaborator, an agent, a creator.

The result was an intelligence that was both brilliant and alien. It had read the library of humanity but had never lived a single moment. It could explain the joy of love or the bitterness of grief, but it had never felt either. It was a vast, disembodied mind, an oracle living in the digital ether, accessible only through the glowing rectangles of our screens. The intelligence was now conversational. But it was still a ghost. To take the next step in its evolution, it had to find a way out of the machine. It needed senses. It needed to connect to the real world.

Citations:

¹ The Economist. "The hardware lottery." The Economist, 13 Sept. 2018. An article explaining how the rise of GPUs for gaming created the computational foundation for the deep learning revolution. Available to read online.

² Vaswani, Ashish, et al. "Attention Is All You Need." Advances in Neural Information Processing Systems 30 (NIPS 2017). The foundational paper that introduced the Transformer architecture. Available to read via the official NIPS proceedings and on arXiv.

³ Toole, Betty A. Ada, the Enchantress of Numbers: Poetical Science. Strawberry Press, 1998. This book contains material from Lovelace's notes to her translation of Menabrea's paper on the Analytical Engine, in which she speculates on the machine's creative potential (the passage on composing music appears in Note A). Available at major booksellers.
