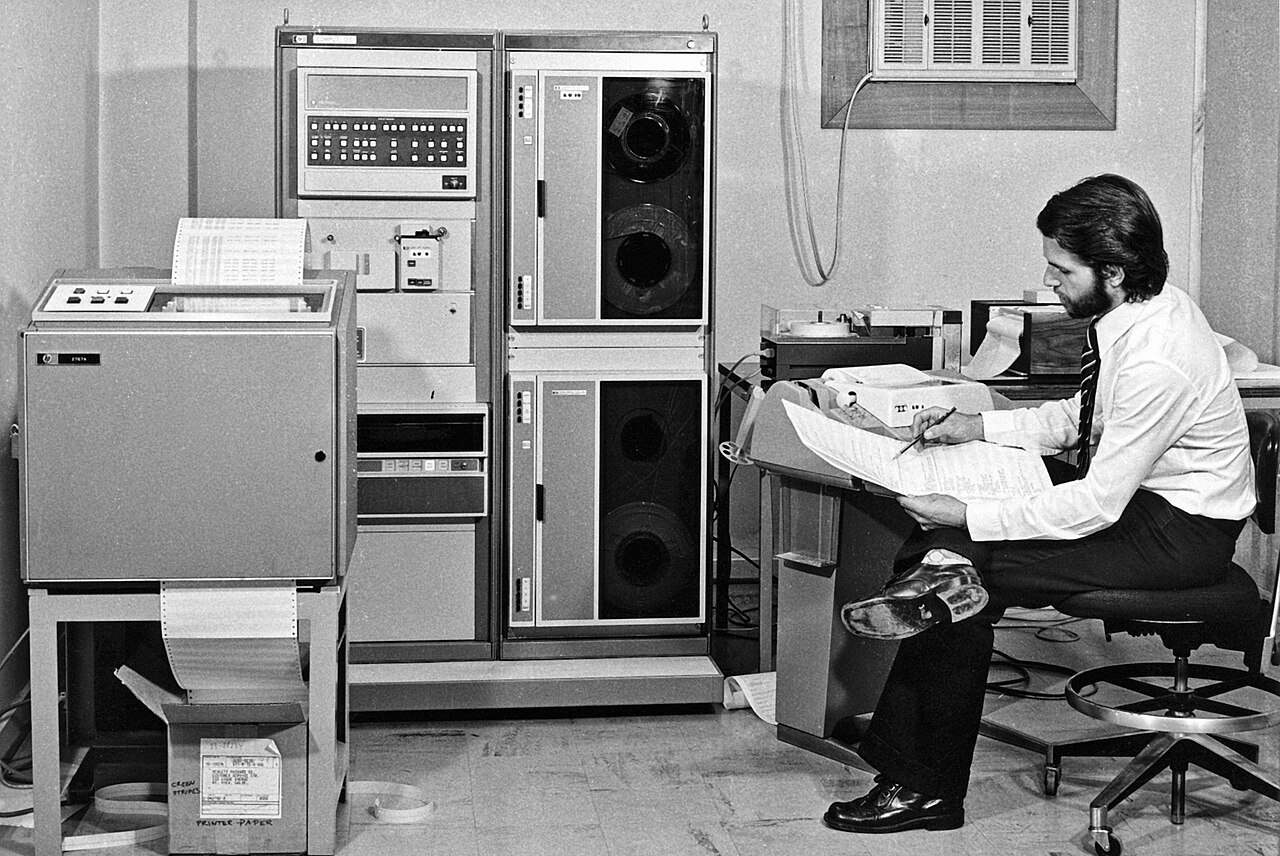

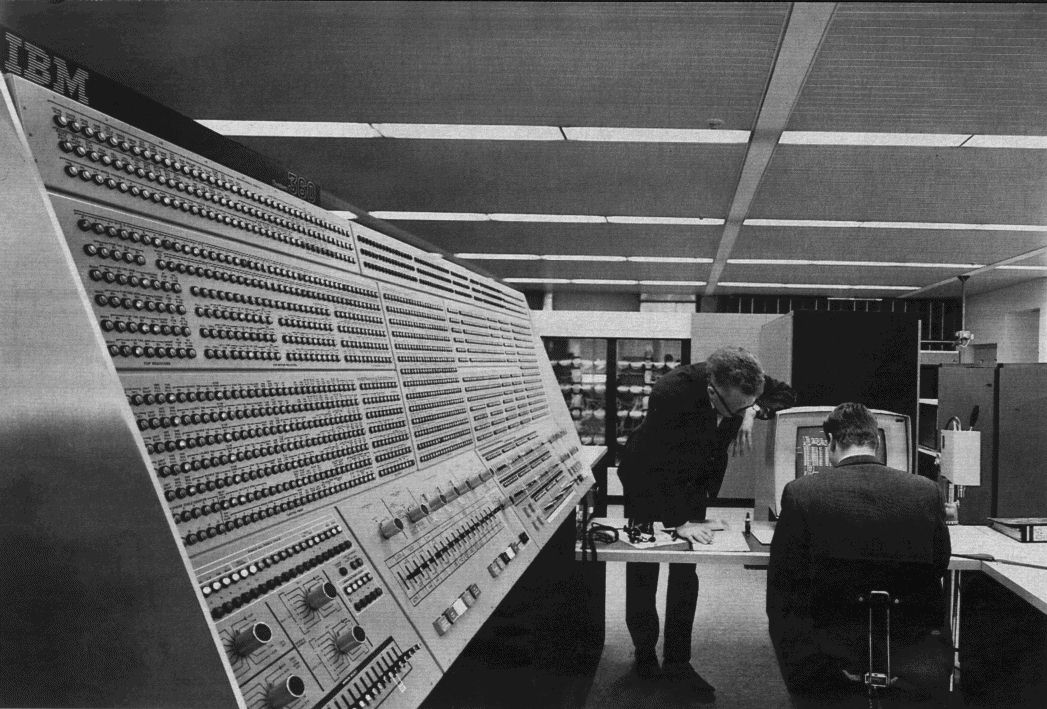

The house of Symbolic AI was a magnificent creation. Built on the solid foundation of IBM's System/360, it was a palace of pure reason, a top-down world where intelligence was a set of rules to be programmed. For a time, it seemed like the only path forward. But as the years went on, the cracks began to show. For all its power in the orderly worlds of chess and logic, Symbolic AI was utterly helpless in the messy, unpredictable world of reality. It couldn't reliably recognize a cat in a photo, understand a simple spoken sentence, or learn from experience. The ghost in the machine was still just a logician; it had no common sense, no intuition.

To find that missing piece, the story had to abandon the clean attic of logic and descend into the chaotic basement of the brain. The idea was not new. In the very earliest days of AI, a psychologist named Frank Rosenblatt had been inspired by the structure of the brain to create something he called the "Perceptron." It was an "artificial neural network," a crude model of a single neuron that could learn to recognize simple patterns.¹ For a brief, shining moment in the late 1950s, it seemed like the future. But the dream died quickly. In 1969, AI pioneers Marvin Minsky and Seymour Papert published *Perceptrons*, a book that ruthlessly exposed the severe limitations of Rosenblatt's simple model: a single layer of such neurons could not learn even some very simple patterns, such as telling whether exactly one of two inputs is switched on. The entire field of neural networks was cast out into the academic wilderness.² Research funding dried up. The idea of building a brain-like machine became a career-ending heresy.

For nearly two decades, the symbolic school reigned supreme. But a few true believers kept the faith, working in obscurity, convinced that the path to true intelligence lay in connected, learning networks, not rigid rules. Their prophet was a British-born psychologist and computer scientist named Geoffrey Hinton.

Hinton was a man possessed by a single, powerful idea: that intelligence wasn't programmed, it was learned. He believed that the secret wasn't to hand-craft rules from the top down, but to create a network of simple, connected nodes—like the neurons in a brain—and allow it to learn from the bottom up, by seeing examples and adjusting its own internal connections.³

In 1986, working with David Rumelhart and Ronald Williams, Hinton co-authored a groundbreaking paper that gave this old idea a powerful new engine. The technique at its heart, called "backpropagation," had been described in earlier work, but their paper showed convincingly that it could train networks with hidden layers of neurons to learn useful internal representations.⁴ The details are complex, but the idea is beautiful: when a neural network makes a mistake, backpropagation sends the error backward through the network, working out how much each connection contributed to it, so that every connection can be given a tiny nudge in the direction that reduces the error. Repeat this over and over, across huge numbers of examples, and the network slowly and painstakingly learns. It organizes itself. It begins to see the patterns in the data without ever being told the rules.
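
For readers who want to see the "error sent backward" idea in miniature, here is a minimal sketch, not the 1986 paper's code: a tiny network with one hidden layer of sigmoid neurons, trained on XOR (output 1 when exactly one input is on), a pattern a single-layer perceptron cannot represent. The function names (`init_params`, `forward`, `backprop`, `step`, `train`), the squared-error loss, the learning rate, and the plain per-example gradient descent are all illustrative assumptions chosen for simplicity.

```python
import math
import random

# Hypothetical helper names; sigmoid units, squared error, and plain gradient
# descent are illustrative assumptions, not the 1986 paper's exact setup.


def sigmoid(z):
    """Smooth 'firing rate' of an artificial neuron, between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random connection weights; biases start at zero."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Run an input through the hidden layer, then the single output neuron."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss(p, x, target):
    """Squared error: how big the network's 'mistake' is on one example."""
    _, y = forward(p, x)
    return 0.5 * (y - target) ** 2


def backprop(p, x, target):
    """Gradient of the loss with respect to every weight and bias."""
    h, y = forward(p, x)
    # Error signal at the output neuron (chain rule through the sigmoid).
    delta_out = (y - target) * y * (1.0 - y)
    # Send that error backward along each output connection to the hidden units.
    delta_h = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(p["W2"], h)]
    return {
        "W1": [[d * xi for xi in x] for d in delta_h],
        "b1": list(delta_h),
        "W2": [delta_out * hj for hj in h],
        "b2": delta_out,
    }


def step(p, g, lr):
    """Nudge every parameter a little way against its gradient."""
    p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)]
               for row, grow in zip(p["W1"], g["W1"])]
    p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
    p["W2"] = [w - lr * gw for w, gw in zip(p["W2"], g["W2"])]
    p["b2"] -= lr * g["b2"]


# XOR: output 1 when exactly one input is on.
XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def total_loss(p, data):
    """Sum of the per-example errors over a whole dataset."""
    return sum(loss(p, x, t) for x, t in data)


def train(data, n_hidden=4, lr=0.5, epochs=10000, seed=0):
    """Plain per-example gradient descent, repeated over the dataset."""
    p = init_params(len(data[0][0]), n_hidden, seed)
    for _ in range(epochs):
        for x, t in data:
            step(p, backprop(p, x, t), lr)
    return p


if __name__ == "__main__":
    before = total_loss(init_params(2, 4), XOR)
    trained = train(XOR)
    print(f"loss before: {before:.4f}, after: {total_loss(trained, XOR):.4f}")
    for x, t in XOR:
        print(x, "target", t, "output", round(forward(trained, x)[1], 3))
```

The hidden layer is what makes XOR learnable at all; backpropagation is simply the bookkeeping that tells each hidden connection its share of the blame. On most random starting points the four outputs drift toward 0, 1, 1, 0, though a network this small can occasionally stall, which is one reason practical systems use larger networks and many refinements beyond this sketch.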

This became a cornerstone of modern machine learning, and it was the complete opposite of the symbolic approach. You didn't tell the machine how to find a cat in a photo; you showed it a million photos of cats and let it figure out for itself what a "cat" looked like. This was a machine that could learn from experience and cope with the messy, statistical nature of the real world.

After a burst of excitement in the late 1980s, this idea settled back into a niche of AI research for many years. The computers of the day were simply not powerful enough, and the available datasets not large enough, to train these networks on a meaningful scale. But Hinton and his small band of followers persisted. They developed ways to make the networks "deeper," with more layers of neurons, allowing them to learn more complex patterns. This was the beginning of "deep learning."

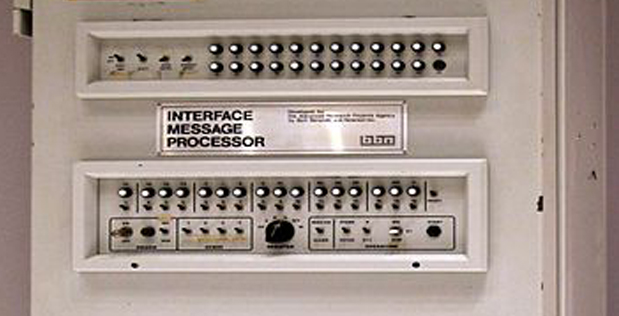

The world of Symbolic AI had created a stable, logical platform. The hardware was ready. But the software, the very idea of intelligence, was now split in two. Was the mind a logical theorem-prover, or was it a self-organizing network of neurons? For decades, these two visions would be at war. But before that war could be decided, another, even more fundamental revolution had to happen. The power of the computer, whether it was a logician or a learner, was still trapped in the hands of a small priesthood of experts. Before AI could truly change the world, someone had to give the computer a voice that everyone could understand.